Hello! I am Nikos

I am currently a student researcher at Google DeepMind, working on continual learning and model merging with foundation models. I completed my PhD in Machine Learning at École Polytechnique Fédérale de Lausanne (EPFL), where I was fortunate to be advised by François Fleuret and Pascal Frossard. My research interests revolve around model merging, multi-task learning, and continual learning. From December 2023 to December 2024, I interned at Google DeepMind in Zurich, where I worked on text-to-image generation.

Before coming to Switzerland, I completed my undergraduate studies in Electrical and Computer Engineering at the National Technical University of Athens in Greece. My thesis, supervised by Petros Maragos, focused on the intersection of tropical geometry and machine learning.

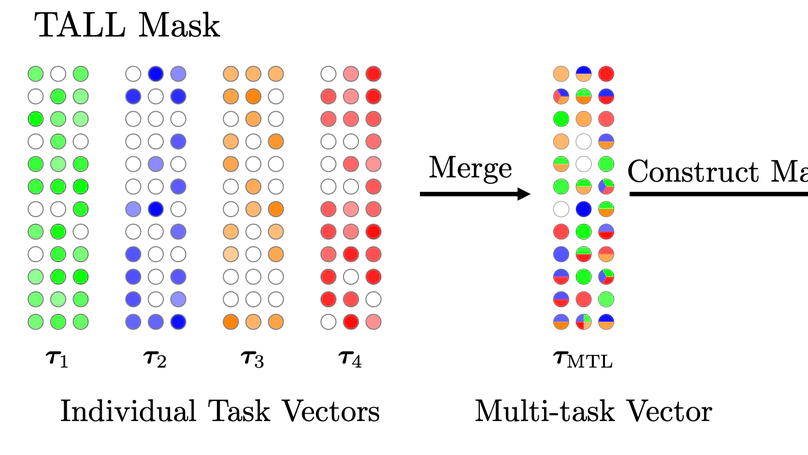

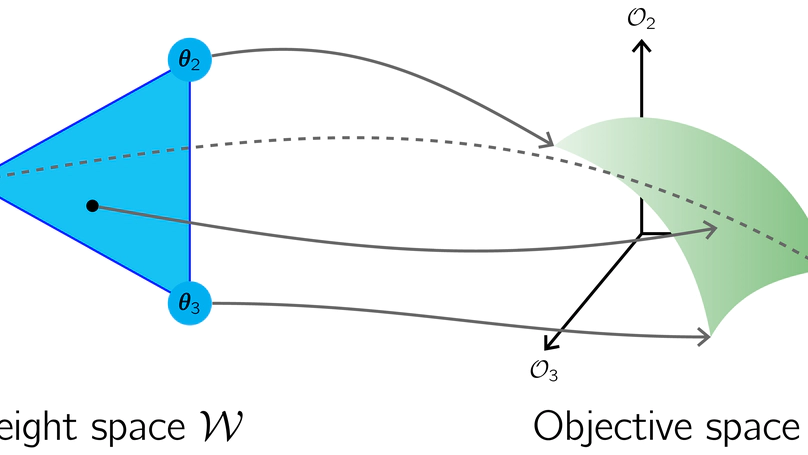

Interests

- Model Merging
- Multi-Task Learning
- Continual Learning

Education

- PhD in Computer Science, 2025, École Polytechnique Fédérale de Lausanne
- MEng in Electrical Engineering and Computer Science, 2020, National Technical University of Athens

Publications

Contact

dimitriadisnikolaos0[at]gmail.com